The seismic wave of generative AI has sharply divided winners and losers.

Nvidia, the Santa Clara-based chip giant, is on a tear: its stock price has more than tripled in just over a year, its market cap has soared to $2.7 trillion, making it the world’s second most valuable company, and its revenue rose 262 percent on year in the latest quarter.

On the other side of the Pacific, Korean chipmaker Samsung Electronics has been struggling: its stock has been stuck at 70,000 won ($51) or below for three years, save for sporadic spikes lasting only several days, while last year’s losses in excess of $10 billion gave way to a relatively modest $1.3 billion profit in the first quarter.

The Suwon, Gyeonggi-based company resorted to replacing a top semiconductor executive earlier this month, a move widely considered a dismissal for poor results.

At the heart of Samsung’s downbeat performance is its failure to maintain technological supremacy in advanced chips — whether it be processors or commodity-like memory chips.

Those high-performing chips, stacked in servers at data centers, are responsible for training and accelerating the workloads of different types of language models and AI systems like OpenAI’s GPT family.

At home, rival SK hynix is riding high, with its stock price tracking the rise of Nvidia as one of the U.S. company’s key suppliers.

This edition of the Explainer delves into the multilayered factors behind the recent downfall of Samsung in the AI chip competition.

![Samsung Electronics' fifth-generation HBM3E chips, which have not yet been mass-produced [SAMSUNG ELECTRONICS]](https://koreajoongangdaily.joins.com/data/photo/2024/06/05/b9b70679-6097-4a06-8e1e-6af6c4b53b35.jpg)

Q. Why the stark difference?

A. Nvidia and Samsung Electronics are both in the chip business, but their main products are different, which translates into a divergence in earnings. The majority of Nvidia’s revenue comes from graphics processing units (GPUs), or processors tailored for data centers, such as the A100 and H100.

One advantage of processor sales is that the product type is less sensitive to cyclical ups and downs compared to memory chips like dynamic random access memory (DRAM) and NAND flash, which are Samsung’s primary items.

Last year witnessed a severe cyclical downturn, which prompted memory-oriented Samsung and SK hynix to hemorrhage billions of dollars in operating losses.

Processor-oriented chip giants like Nvidia, AMD and Intel, however, all posted profits, although the lion’s share clearly went to Nvidia.

Nvidia’s outsized influence in the segment, where it holds a market share of more than 80 percent, is attributed to its processors’ speed and performance as well as a well-developed ecosystem that helps lock in millions of developers.

Owing to the dominant position, the price of the H100 has skyrocketed to around $40,000, but the latest memory chips fitted in the H100, known as high bandwidth memory (HBM), only cost a tenth of that, according to an insider in the Korean semiconductor industry.

Is the product portfolio difference the only reason?

Samsung has its own share of problems in advancing its semiconductor manufacturing process and yield rates. In the memory chip sector, it used to be the undisputed leader, but the tide started turning around the early 2020s when it adopted next-generation chip lithography equipment called extreme ultraviolet (EUV).

Chip manufacturing with EUV machines requires a far higher level of sophistication, and the Korean company has had trouble meeting the timelines in its road maps for EUV-manufactured chips.

For instance, Samsung Electronics has not yet mass-produced any HBM chips using the fifth-generation manufacturing process, but SK hynix and Micron have.

In non-memory sectors such as the foundry, or contract chipmaking, business, Samsung is losing ground to Taiwan’s TSMC. Big clients like Apple, Nvidia and Qualcomm chose TSMC over Samsung because the Taiwanese manufacturer is known for the high yields of the chips it produces, a critical quality measurement in the business.

![SK hynix promotes its supply contract with Nvidia at this year's GTC conference, which was held by the U.S. company. [YONHAP]](https://koreajoongangdaily.joins.com/data/photo/2024/06/05/e874ab1d-763a-4129-926b-a006497db998.jpg)

Why the contrasting fate between Samsung and SK hynix?

Misreading the trend led Samsung Electronics to lose the lead in the hot battle over HBM, a premium type of DRAM chip considered essential for AI processors.

While SK hynix, after developing the world’s first HBM chips in 2013, persistently devoted resources to the fledgling technology, Samsung Electronics disbanded its HBM research team around 2018 after concluding that the niche market didn’t hold much potential.

“The reason behind Samsung’s struggle in HBM chips now can be narrowed down to its leaders and people,” said Greg Roh, managing director at Hyundai Motor Securities. “When they should have focused more on HBMs, they disbanded the team and its valuable researchers left for other companies.”

SK hynix, on the other hand, kept investing in the technology, which helped the company clinch an early HBM deal with AMD before the major supply contract with Nvidia.

Its first-generation HBM was supplied for AMD’s Fiji GPU series in 2015, and the second generation was fitted in AMD’s Vega GPUs. Although the contract was not as big as the one with Nvidia, it gave SK hynix an opportunity to improve its chipmaking capabilities and its chips’ compatibility with GPUs.

SK hynix continued with the research until releasing the fourth-generation HBM3 in 2022 and began supplying it for Nvidia’s H100 processor, which launched the same year.

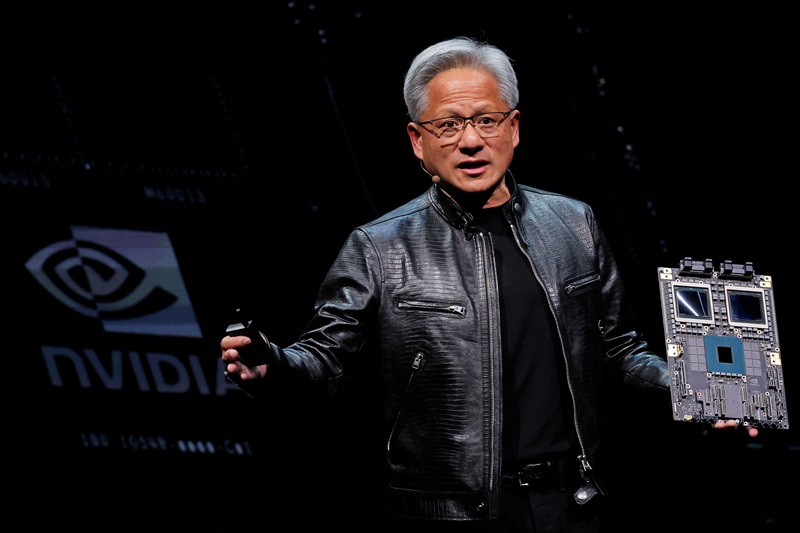

SK hynix remains the largest supplier of HBM chips to Nvidia, while Samsung has not landed a notable deal, although Nvidia CEO Jensen Huang recently confirmed that tests are underway.

The two chipmakers’ stock prices reflect their asymmetrical performances.

Samsung Electronics gained a mere 8 percent in the year since June 2023.

Over the same period, SK hynix gained 79.2 percent, following a similar trajectory to Nvidia, which triumphed in the AI boom with a whopping 197.3 percent rise.

Can Samsung rebound?

It depends on how quickly Samsung Electronics improves its manufacturing process to produce the next generation of DRAM chips with an edge in performance and price competitiveness over those of its rivals.

“It seems that after the sixth generation of DRAM or its 1c [11 to 12 nanometer] process, Samsung Electronics will have a chance to take back the lead in HBM,” said an industry insider who wished to stay anonymous.

“When DRAM performance improves and the HBM product, which is a package of these DRAMs, is able to attain better performance than those of its rivals at the same cost, then Samsung Electronics will have a chance.”

The electronics giant is ramping up efforts to reclaim its unrivaled lead in the memory chip race.

It is betting heavily on what it expects to be the next jackpot in the AI game, determined to not repeat what happened with HBM.

One of its bets is Compute Express Link (CXL), an interface that connects processors such as GPUs with DRAM for faster and more energy-efficient data processing. Thanks to its scalability and its ability to pool memory, which lets a processor that needs more capacity for a given computation access multiple memory chips, CXL has emerged as the next star of the AI era.

Samsung has grabbed an early lead, having developed the industry’s first CXL-based DRAMs in 2021 and successfully verified the technology with Red Hat’s operating system last year.

Theoretically, CXL could significantly expand memory capacity cost-effectively, although its marketability has not yet been proven.

This rising star may be able to aid heavy computation, according to industry experts, but will not replace HBM because the technology is not yet deployed by Nvidia.

“CXL availability is the main issue as Nvidia GPUs don’t support it,” a report from chip research firm SemiAnalysis said.

But then again, no company has as deep a talent pool or as much capital as Samsung, which is why, once it plots a course, its catch-up will be formidable.

Some 10 people from global technology companies like Google, Apple, Meta and AMD have recently joined Samsung Electronics as executives, according to the company’s electronic disclosure last year.

It also spent 48.4 trillion won on its chip business last year, up slightly from 47.9 trillion won the previous year, despite enduring one of the worst cyclical downturns in history.

BY PARK EUN-JEE, JIN EUN-SOO [park.eunjee@joongang.co.kr]