Since it began investing in OpenAI in 2019, Microsoft has put a total of $13 billion into the company, including $10 billion early last year, securing a 49% stake. The result? A huge success.

As everyone knows, Microsoft has secured technological leadership in the era of generative AI and become the most talked-about company, even topping the global market capitalization rankings early this year. It continues to research its own AI models and is set to release small language models (SLMs) this year.

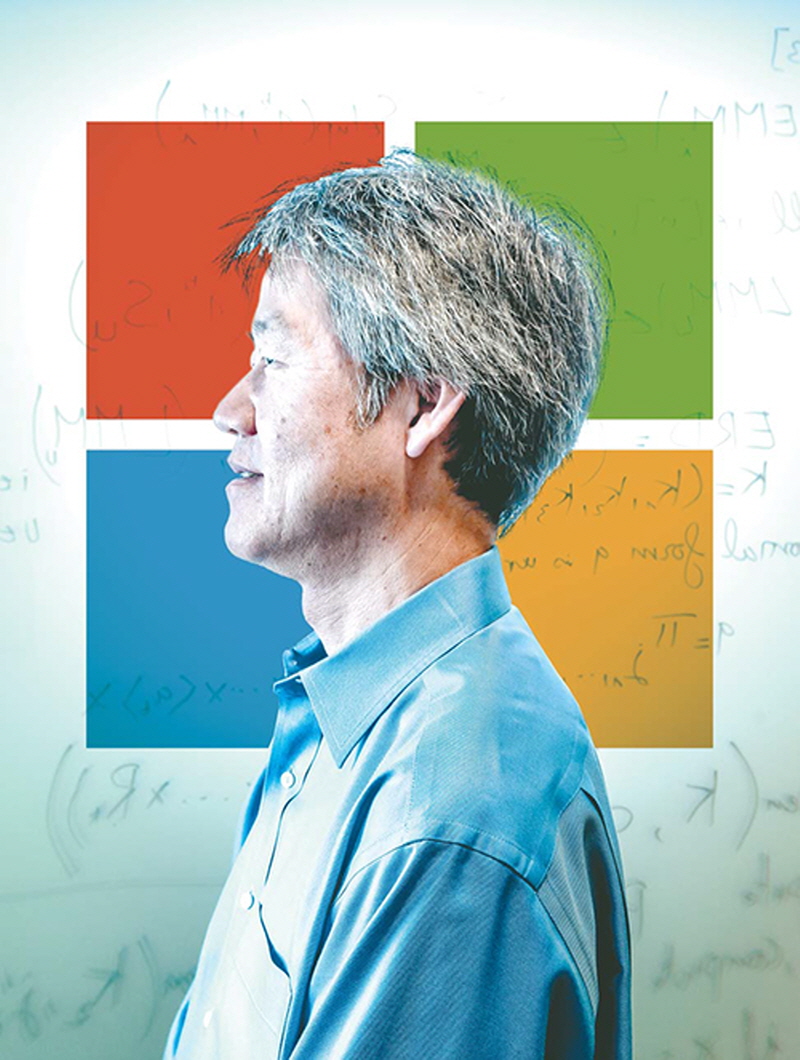

Peter Lee, President of Microsoft Research, is the key figure behind the company’s advancement in the generative AI era. He led the internal technical review of OpenAI and spearheaded the development of the company’s own AI models.

Satya Nadella, CEO of Microsoft, even referred to him as the “secret weapon.” Born in the United States in 1960, Korean-American Lee graduated from the University of Michigan and worked as a professor of computer science at Carnegie Mellon University from 1987 to 2008.

He joined Microsoft in 2010 and has been leading the research unit. In 2016, he also served on the President’s Commission on Enhancing National Cybersecurity created by former U.S. President Barack Obama.

The JoongAng Ilbo, a leading Korean newspaper affiliated with the Korea Daily, interviewed Lee to discuss the future of generative AI and the impact of multimodal AI technology on our lives.

1. AI on farms? Changes brought by SLMs

The mood in the AI industry, which until last year was fixated on “bigger is better” and the race to scale up large language models (LLMs), has started to shift this year. Microsoft, which has led the generative AI craze, has singled out SLMs as a trend to watch this year. Lee, the “secret weapon,” leads SLM research within Microsoft. Why does he believe small models are necessary?

– Why have you been emphasizing SLMs lately?

“SLMs are useful in situations where there is no connection to the cloud, where inference is limited to local devices (like smartphones), or when quick responses are crucial. They are also beneficial for reducing costs when only simple tasks need to be performed.”

– Specifically, in which fields can we expect SLMs to bring change?

“SLMs will make AI easily accessible in areas where cost previously made it difficult. Agriculture is a prime example. Our lightweight Phi model, an SLM, can run on individual devices rather than in the cloud, which makes it particularly useful in farming scenarios that need AI assistance. With AI costs rising, cost control has become a major topic among LLM developers like Microsoft and Google. They are realizing that not every AI service needs an expensive, large LLM. Our focus on SLMs follows this line of thinking. You don’t need a Michelin chef to cook ramen.”

– Last year, you announced plans to develop SLMs that are smaller and more cost-efficient than OpenAI’s models. Then, in April, you unveiled the Phi-3 SLM family.

“Phi-3 focuses on reasoning and problem-solving abilities. Microsoft Research innovated in data curation, enabling Phi-3 to outperform models ten times its size on these tasks. This reflects Microsoft’s AI leadership: a language model that runs on devices such as laptops and smartphones as well as in the cloud.”

– How do you see it creating synergy with Microsoft’s existing businesses?

“Microsoft has added various AI models, including OpenAI’s, to Azure, its cloud service, so that customers can choose according to their needs. Phi-3 is a significant milestone in this strategy. Previously, only LLMs were available; now customers can select the most suitable model based on size and cost, allowing them to implement new features more efficiently and effectively.”

2. Multimodal Competition: Microsoft’s Strategy

The multimodal AI era began last December, when Google unveiled Gemini 1.0, a multimodal model capable of processing text, voice, images, and video. OpenAI and Microsoft have since released multimodal models as well.

– How does Microsoft plan to secure a competitive edge in the multimodal AI sector?

“We have high hopes for using multimodal AI in medical and scientific discoveries. Microsoft Research is collaborating with global experts to study how generative AI can synthesize and integrate various types of data related to proteins. This has allowed us to rapidly discover new molecules for solid-state batteries and tuberculosis treatments.”

Last month, Microsoft and Providence Health System, a U.S. nonprofit health network, unveiled GigaPath, an AI model for digital pathology pre-trained on real-world data. GigaPath can assist in diagnosing and analyzing cancer by examining digital images of patients’ tissue samples.

– In which fields, where AI is not yet widely used, do you believe it could be put to active use?

“AI is a foundational technology that can impact almost everything we do. Ironically, this makes it harder to imagine specific applications.”

– So AI can be applied to every area of our lives and industries. Are there any fields that stand out?

“The two most exciting fields are healthcare and education. In healthcare, AI has already progressed to automating note-taking and administrative tasks for doctors and nurses, and more companies are introducing AI-based products designed to improve their convenience and productivity.”

Lee is a member of the National Academy of Medicine, and this year TIME magazine named him one of the 100 most influential people in health. An AI engineer with a keen interest in medical research, Lee said on a Microsoft podcast in April that it is crucial for AI to let doctors maintain eye contact and be present with patients instead of typing on laptops. He added that emails GPT-4 wrote to patients were rated as more human than those written by doctors. This suggests that AI, having learned from data that empathy is a core part of medicine, can play a significant role in improving communication between doctors and patients.

OpenAI CEO Sam Altman, left, shakes hands with Microsoft Chief Technology Officer and Executive VP of Artificial Intelligence Kevin Scott during the Microsoft Build conference at Microsoft headquarters in Redmond, Washington, on May 21, 2024. [Yonhap]

3. The need for open source despite “Nuclear Weapon” criticism

Last year, Meta permitted commercial use of its open-source AI model Llama 2, which performed significantly better than Llama 1. However, open-source AI remains a subject of debate. Optimists say it could accelerate AI development through active competition, while pessimists warn it could fuel misuse. Geoffrey Hinton, a University of Toronto professor emeritus known as the “godfather of AI,” compared open-source AI to “open-source nuclear weapons” in an interview with us last year.

– Open-source AI has advanced rapidly since Llama 2. What are your thoughts on this?

“Microsoft Research believes that publishing research openly democratizes access to knowledge and technology, ultimately allowing more people to use AI. Our published papers and the distribution of Phi-3 are examples of this.”

– Phi-3 is not an open-source model, is it?

“We disclosed the technical principles behind its creation and released the model weights. We also released Orca, an SLM, and AutoGen, an open-source tool that helps developers build complex LLM-based applications.”

– Does this mean you support open-source AI?

“Open-source models carry risks, but a diverse ecosystem with multiple distribution options, open source included, is beneficial. We need that variety of options.”

4. AI capable of reasoning?

– What are the current trends in generative AI research?

“There are three main trends. First, developing new kinds of AI models, such as SLMs and domain-specific models, and building multimodal models that can reason over various data types, like medical images or protein structures. Second, ‘AI agents,’ which collaborate as a team under human supervision to solve problems beyond the capabilities of a single AI system. Lastly, applying AI to science, including drug discovery and materials science.”

– Will AI eventually be able to predict and reason?

“Whether AI systems can reason, and how they do so, is a central debate in computer science today. LLMs will likely keep posting strong results on specialized benchmarks, but such tests are limited; they have proven inadequate for capturing the full strengths of models. In many ways, we are at a technological turning point similar to that of electricity before the invention of the light bulb.”

– So, do you believe AI can reason?

“OpenAI’s GPT-4 can score top marks on high school exams in science and the humanities and pass professional exams such as the medical licensing exam. But does this demonstrate reasoning ability? It’s still a difficult question. Capabilities like complex mathematical calculation, planning, and active learning through interaction remain challenging for now. However, I believe future AI systems will achieve them.”

BY YUJIN KWEN, YOUNGNAM KIM [kwen.yujin@joongang.co.kr]